In this article, we give a brief overview of two media content distribution protocols that rely on multicasting: Multicast ABR (MABR) and Coded Caching. While MABR will be the standard technology for bandwidth-efficient livestreaming over multicast networks, Coded Caching can offer similar benefits for video-on-demand traffic.

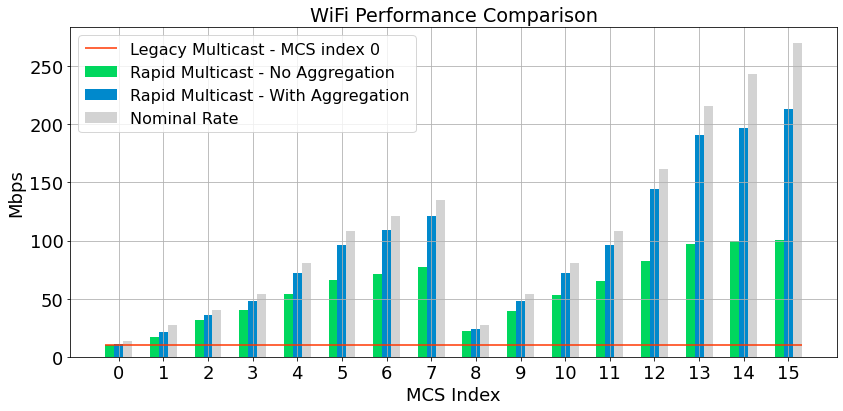

MABR stands for Multicast Adaptive BitRate: it combines two major content distribution technologies that have so far rarely been used together, multicasting and adaptive bitrate video transmission.

With adaptive streaming (such as HLS or DASH), video clients adapt the requested video bitrate (and hence quality) to the bandwidth available on the communication channel. Because adaptive streaming relies on HTTP, it integrates extremely well into the architecture of the web. However, for broadcast communication channels and popular live streams, individual (unicast) transmission does not scale well. MABR combines the advantages of adaptive streaming with the efficiency of multicast transmission for live streams: it integrates well into the web architecture, but transmits each piece of content only once per link. Compared to unicast, where every viewer behind a link receives a separate copy, this can be tremendously more efficient.
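To make the once-per-link argument concrete, here is a minimal Python sketch that counts link transmissions of a single video segment on a small distribution tree. The tree, node names, and function names are hypothetical, and the sketch ignores protocol details such as group management: it simply assumes that a multicast link carries the segment once if at least one viewer downstream needs it.

```python
# Hypothetical distribution tree: child node -> parent node; "server" is the root.
PARENT = {
    "router": "server",
    "access1": "router", "access2": "router",
    "v1": "access1", "v2": "access1", "v3": "access1",
    "v4": "access2", "v5": "access2", "v6": "access2",
}
VIEWERS = ["v1", "v2", "v3", "v4", "v5", "v6"]


def path_links(parent: dict[str, str], node: str) -> list[tuple[str, str]]:
    """Links (parent, child) on the path between the server and `node`."""
    links = []
    while node in parent:
        links.append((parent[node], node))
        node = parent[node]
    return links


def unicast_link_load(parent: dict[str, str], viewers: list[str]) -> int:
    # Every viewer receives its own copy, so each link on its path carries one more copy.
    return sum(len(path_links(parent, v)) for v in viewers)


def multicast_link_load(parent: dict[str, str], viewers: list[str]) -> int:
    # Each link carries the segment once, no matter how many viewers sit behind it.
    return len({link for v in viewers for link in path_links(parent, v)})


print("unicast link transmissions:", unicast_link_load(PARENT, VIEWERS))
print("multicast link transmissions:", multicast_link_load(PARENT, VIEWERS))
```

With six viewers behind two access nodes, unicast needs 18 link transmissions and multicast needs 9; the gap grows with the number of viewers per link.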

Multicasting and video-on-demand? Coded Caching

The bandwidth savings of Multicast ABR are intuitive for live video. But can multicasting also reduce the network load of individual video-on-demand services? Two obvious ideas are:

- Pre-caching of popular content via multicast

- Bundling of simultaneous requests in a multicast data carousel
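The second idea, bundling simultaneous requests, can be sketched as simple request batching. In this hypothetical model (not a specific carousel protocol), the first request for a title opens a batch, the multicast transmission starts `window_s` seconds later, and every request for the same title that arrives before then joins it. A longer window saves more transmissions at the cost of start-up delay.

```python
def bundled_transmissions(requests: list[tuple[float, str]], window_s: float) -> int:
    """Count multicast transmissions when requests are batched per title.

    The first request for a title opens a batch; the transmission starts
    `window_s` seconds later and serves every request for that title that
    arrived in the meantime.
    """
    batch_opened: dict[str, float] = {}  # title -> time its current batch was opened
    transmissions = 0
    for t, title in sorted(requests):
        opened = batch_opened.get(title)
        if opened is None or t - opened > window_s:
            batch_opened[title] = t  # too late for the pending batch: open a new one
            transmissions += 1
        # otherwise the request joins the pending transmission
    return transmissions


# Hypothetical request log: (arrival time in seconds, title)
requests = [(0, "A"), (2, "A"), (4, "B"), (7, "A"), (9, "B"), (30, "A")]
print("multicast transmissions:", bundled_transmissions(requests, window_s=10))
print("unicast transmissions:", len(requests))
```

For these six requests, a 10-second window needs three multicast transmissions instead of six unicast ones.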

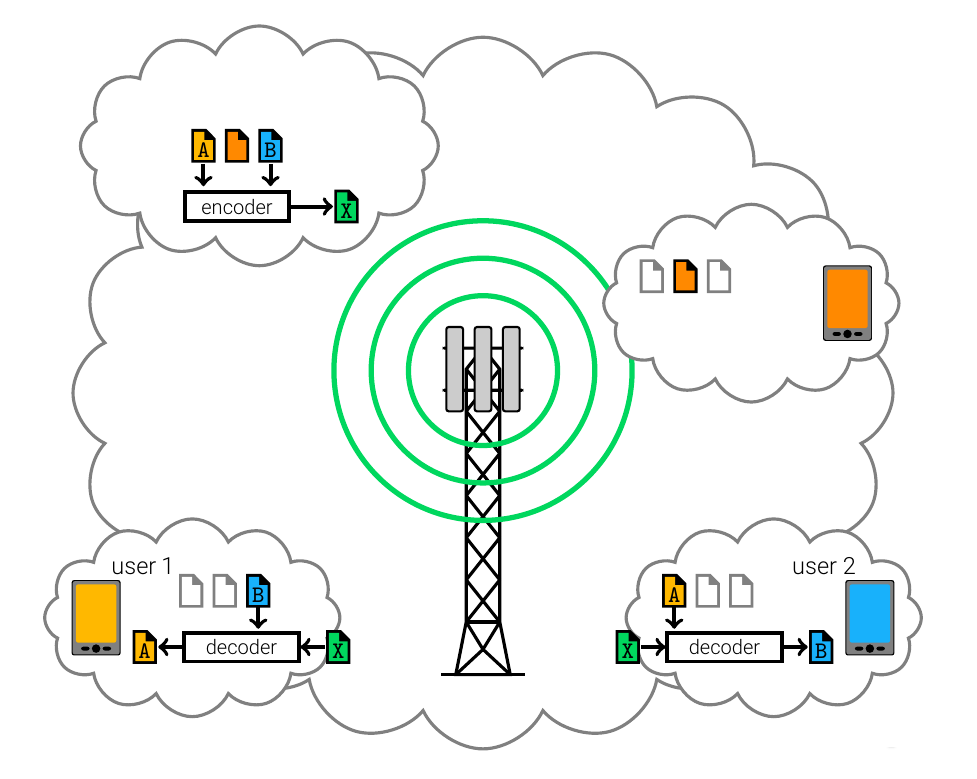

Coded Caching is the most efficient combination of both: caches at the receivers and coded multicast transmission. A tutorial on Coded Caching technology is available at https://cadami.net/coded-caching-blog/
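The core trick of coded multicast transmission can be illustrated with the textbook two-client, two-title example from the coded caching literature (Maddah-Ali and Niesen). The Python sketch below is illustrative only and is not Cadami's algorithm; the title contents, variable names, and helper functions are hypothetical, and real titles would be split into many more parts. Each client caches half of every title. When client 1 requests title A and client 2 requests title B, a single XOR-coded multicast packet delivers the missing half to both.

```python
def split(data: bytes) -> tuple[bytes, bytes]:
    """Split a title into two equal halves."""
    half = len(data) // 2
    return data[:half], data[half:]


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR of two equally long byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


# Two hypothetical titles of equal length, each split into two halves.
A = b"AAAAaaaa"
B = b"BBBBbbbb"
A1, A2 = split(A)
B1, B2 = split(B)

# Placement phase (e.g. off-peak): client 1 caches the first halves, client 2 the second halves.
cache_client1 = {"A1": A1, "B1": B1}
cache_client2 = {"A2": A2, "B2": B2}

# Delivery phase: client 1 requests A (missing A2), client 2 requests B (missing B1).
# One multicast packet serves both clients.
coded = xor(A2, B1)

# Each client cancels out the half it already holds in its cache.
client1_A2 = xor(coded, cache_client1["B1"])
client2_B1 = xor(coded, cache_client2["A2"])

assert cache_client1["A1"] + client1_A2 == A
assert client2_B1 + cache_client2["B2"] == B
print("both clients decoded their titles from one coded packet")
```

The coded packet is half a title long, whereas sending the two missing halves separately would take a full title's worth of traffic. The example also shows why clients need storage and some computation: decoding is one XOR with cached data.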

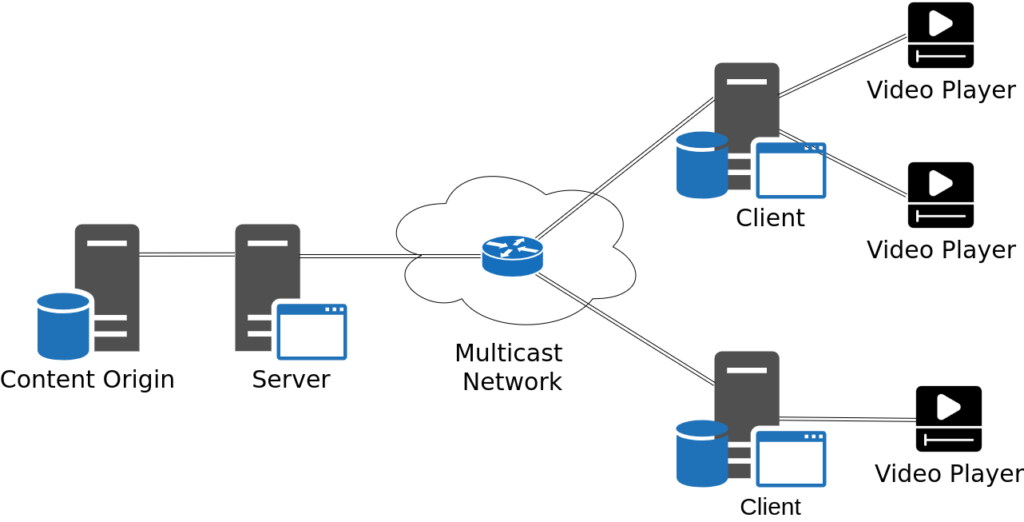

Coded Caching and Multicast ABR share the same architecture: Content Origin, Multicast Server, IP Multicast Network, Multicast Client, and Video Clients. To extend Multicast ABR deployments to video-on-demand with Coded Caching, two additional components are needed:

- storage at the client

- computational power to encode/decode the data stream

The Content Origin and the Coded Caching server can be the same node, co-located, or separate. The same applies to the Coded Caching client and the video client, such as a set-top box. Coded Caching can be used at every level of the network hierarchy and supports all kinds of multicast networks, such as operator, broadband, satellite, or cellular (FeMBMS) networks.

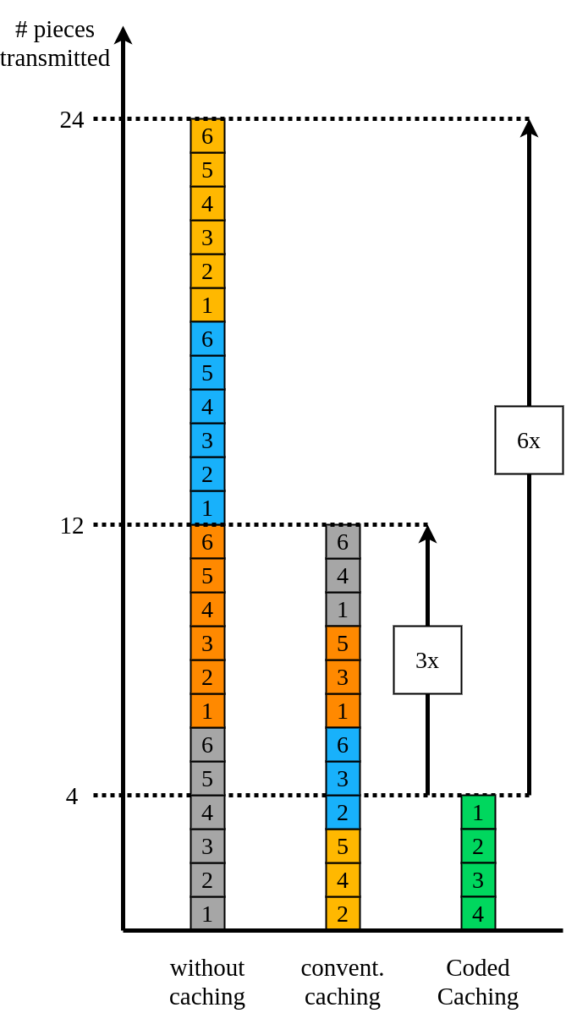

Potential gains are illustrated in the following case study.

Case Study – CDN Origin Server and Edge Nodes

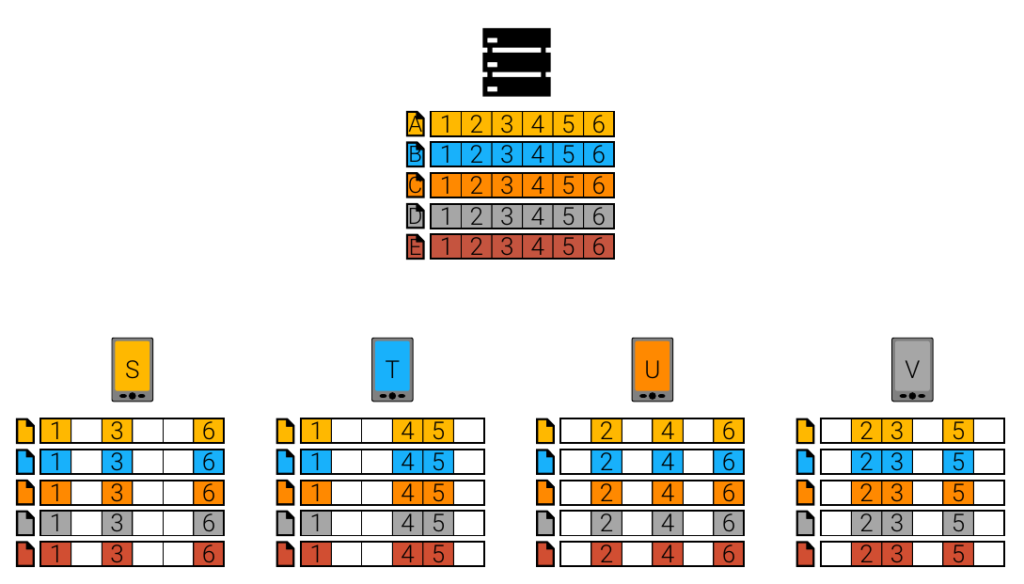

Coded Caching achieves the optimal trade-off between storage size at the client and traffic emitted by the server. What does this mean? Let us illustrate it with an example scenario with the following parameters:

- C = 50 clients,

- F = 200 titles in the video-on-demand library,

- and a request probability such that a request for the most popular title is n times more likely than a request for the n-th most popular title (a Zipf distribution with exponent 1).
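The request model in the last bullet can be written down directly: title n is requested with probability proportional to 1/n. A minimal Python sketch of these probabilities for F = 200 titles (the function name is hypothetical):

```python
def zipf_popularity(num_titles: int) -> list[float]:
    """Request probability per title, ranked by popularity (index 0 = rank 1).

    Title n gets weight 1/n, so the most popular title is n times more
    likely to be requested than the n-th most popular one.
    """
    weights = [1.0 / n for n in range(1, num_titles + 1)]
    total = sum(weights)  # harmonic number H_F, the normalizing constant
    return [w / total for w in weights]


p = zipf_popularity(200)  # F = 200 titles
print(f"most popular title: {p[0]:.1%} of requests")
print(f"ratio rank 1 / rank 10: {p[0] / p[9]:.1f}")
```

The normalizing constant is the harmonic number H_200 ≈ 5.88, so under this model the most popular title attracts about 17% of all requests.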

Coded Caching outperforms popularity caching along the entire trade-off curve. We highlight one operating point: Coded Caching reduces server traffic by 66% while storing the equivalent of only five titles per client. By comparison, caching the five most popular titles produces about twice as much server traffic. Coded Caching is exceptionally efficient with storage: to reduce traffic to the same level, popularity caching needs five times the storage.
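The popularity-caching baseline is easy to check under the request model of the example: if every client stores the k most popular titles completely, the server only has to deliver requests for the remaining titles. This sketch (function name hypothetical) computes that remaining traffic share; it models plain popularity caching, not Coded Caching.

```python
def popularity_caching_traffic(num_titles: int, cached: int) -> float:
    """Server traffic relative to no caching when every client stores the
    `cached` most popular titles completely (Zipf popularity, exponent 1)."""
    weights = [1.0 / n for n in range(1, num_titles + 1)]
    # Requests for cached titles are served locally; only the rest reaches the server.
    return sum(weights[cached:]) / sum(weights)


for k in (5, 25):
    share = popularity_caching_traffic(200, k)
    print(f"caching the top {k} titles: {share:.1%} of the traffic remains")
```

Under this model, caching five titles leaves roughly 61% of the traffic, close to twice the 33.7% quoted for Coded Caching, while caching 25 titles (five times the storage) brings it down to roughly 35%.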

Coded Caching benefits both popular and long-tail content. For each title, Cadami's Coded Caching algorithm selects the fraction of the title that is stored in the client cache. This fraction depends on the title's popularity and on the so-called Coded Caching gain G: the server emits 1/G of the unicast traffic for the title, and every client stores a fraction (G-1)/C of it, where C is the number of clients. Conventional caching corresponds to G=∞, with the title stored completely and no traffic; G=1 means no storage and 100% traffic when the title is downloaded. Let us explore a detailed breakdown of the highlighted operating point:

| Coded Caching Gain G | ∞ | 3 | 2 | 1 | Total |
|---|---|---|---|---|---|
| Files (ranked by popularity) | 1 | 2-50 | 51-152 | 153-200 | 200 |
| Cache Storage per File | 100% | 4% | 2% | 0% | – |
| Total Storage (in files) | 1 | 1.96 | 2.04 | 0 | 5 |
| Total Traffic (vs. no caching) | 0% | 19.6% | 9.4% | 4.7% | 33.7% |
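The breakdown can be recomputed from the two formulas in the text: a title with gain G costs (G-1)/C of a title in storage on every client and 1/G of its unicast traffic, weighted by its request share under the Zipf model. The following Python sketch takes the tier boundaries from the table as given; how Cadami's algorithm chooses them is not modeled, and the function name is hypothetical.

```python
import math


def coded_caching_breakdown(num_clients: int, num_titles: int, tiers):
    """Storage (in titles) and traffic share (vs. no caching) per tier.

    `tiers` is a list of (gain, first_rank, last_rank); gain = math.inf means the
    titles are stored completely. Per title: storage (G-1)/C, traffic 1/G of unicast.
    """
    weights = [1.0 / n for n in range(1, num_titles + 1)]  # Zipf, exponent 1
    total = sum(weights)
    rows = []
    for gain, first, last in tiers:
        if math.isinf(gain):
            storage_per_title, traffic_factor = 1.0, 0.0  # conventional caching
        else:
            storage_per_title = (gain - 1) / num_clients
            traffic_factor = 1.0 / gain
        request_share = sum(weights[first - 1:last]) / total
        storage = (last - first + 1) * storage_per_title
        rows.append((gain, storage, request_share * traffic_factor))
    return rows


# Tier boundaries taken from the table (C = 50 clients, F = 200 titles).
TIERS = [(math.inf, 1, 1), (3, 2, 50), (2, 51, 152), (1, 153, 200)]
rows = coded_caching_breakdown(50, 200, TIERS)
for gain, storage, traffic in rows:
    print(f"G={gain}: storage {storage:.2f} titles, traffic {traffic:.1%}")
print(f"total: storage {sum(r[1] for r in rows):.2f} titles, "
      f"traffic {sum(r[2] for r in rows):.1%}")
```

The storage row is reproduced exactly (1 + 1.96 + 2.04 = 5 titles). The traffic row comes out within about a quarter of a percentage point of the table, at roughly 34% in total; small deviations are expected because this is an idealized model rather than the production algorithm.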

Coded Caching extends Multicast ABR to video-on-demand services

Coded Caching converts network traffic generated by video-on-demand services into multicast traffic. Coded Caching and Multicast ABR share a similar architecture, so Coded Caching is an intuitive extension of Multicast ABR from linear live TV to video-on-demand services. Coded Caching has proven itself in more than 300 commercial deployments for video distribution in aircraft. Implementations are available for various operating systems, such as Linux and Android, and for various hosting options, such as servers running in the cloud and clients running on embedded hardware. Cadami is looking for partnerships with organizations engaged in Multicast ABR standardization and commercialization to discuss the integration of Coded Caching. If you are interested in learning more about Coded Caching and starting a conversation, please get in touch.